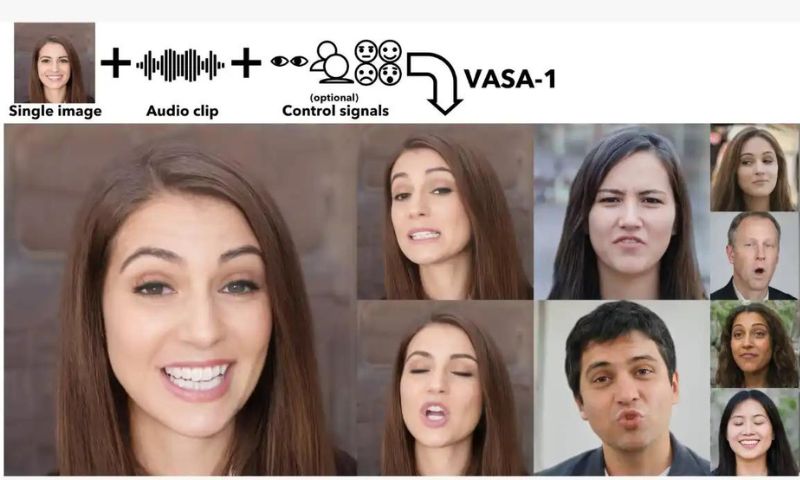

NEW YORK: Microsoft Research Asia has introduced VASA-1, an innovative AI application capable of transforming still images of people and audio tracks into dynamic animations, complete with synchronized lip movements and natural facial expressions.

The technology, detailed in a paper by the research team, marks a significant step forward in AI-driven virtual character animation, with a strong emphasis on realism and expressiveness.

VASA-1 generates realistic talking faces of virtual characters that convey authenticity and liveliness. From a single static image and a corresponding speech audio clip, the application produces holistic facial dynamics and natural head movements, capturing a broad spectrum of facial nuances.

“The premiere model, VASA-1, not only produces exquisitely synchronized lip movements with the audio but also incorporates lifelike facial expressions and head motions,” the researchers explained.

Key to VASA-1’s functionality is that it generates facial dynamics and head movements in a face latent space, which enables high-quality videos with realistic facial and head motion. The application supports real-time engagement with lifelike avatars that emulate human conversational behaviors.

The core design of VASA-1 centers on building an expressive, disentangled face latent space from video data, in which facial dynamics and head movements are generated together.

“We are thrilled to introduce VASA-1, which represents a significant advancement in AI-driven character animation. This technology opens up new possibilities for immersive virtual experiences and interactive communication,” said the research team.